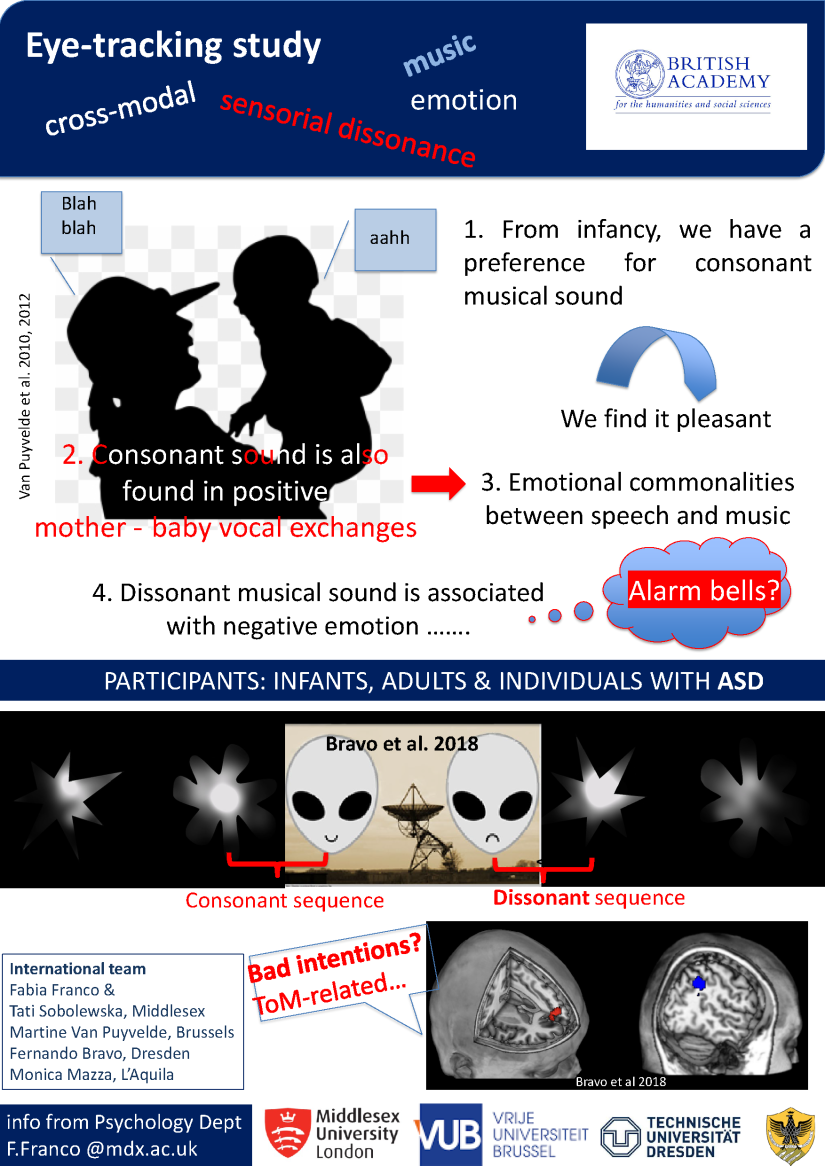

Emotional meaning of musical consonance and dissonance in cross-modal non-verbal tasks

Funded by the British Academy, 2017–2018

This interdisciplinary project, funded by the British Academy, builds on an international collaboration between facilities and experts in developmental science, music, psychophysiology, psychoacoustics, neuroscience, electronic arts and autism. The outcomes of the project are expected to generate further research with significant potential for translation into early screening and support for clinical groups.

This research hypothesised that the psychoacoustic features that distinguish consonance from dissonance in music may be part of a primitive affect system. This system would develop early in infancy to process communicative interactions across the human soundscape, encompassing both music and speech. Such features would support the online processing of social events as well as of novel physical encounters ("is this OK?"). As a first step in testing this idea, we took abstract shapes that previous research has associated with rounded vs. rough speech sounds and with more or less negative valence. We then combined them with tone sequences forming consonant or dissonant musical intervals. Three eye-tracking experiments using static audiovisual displays revealed an overall preference for curvy shapes in infants younger than 6 months. In infants older than 6 months and in adults, this preference gave way to more complex cross-modal processing modulated by sound. Specifically, a congruence bias emerged: participants deployed more visual attention to shapes whose valence matched that of the sounds heard at the same time (e.g., curvy/consonant and spiky/dissonant).

Four further experiments used animated rather than static audiovisual displays of the same shapes and sounds. These provided converging multi-method evidence and refined the findings, combining three measures: eye-tracking, to measure the implicit deployment of attention; cardiorespiratory physiology (respiratory sinus arrhythmia, RSA), to measure neurovisceral integration; and questionnaires, to measure the adult participants' explicit judgements.

The results confirmed that musical sound modulates the inspection of associated visual material and biases attention towards shapes that are emotionally congruent with the sound sequences. However, unlike the static shapes, the moving shapes strengthened the attentional bias towards negatively valenced congruent pairings (e.g., spiky/dissonant). This behavioural response coincided with depressed physiological regulation, a pattern that can be linked to increased anxiety.

Follow-up work is underway to extend the study to individuals with autism spectrum disorder (ASD). During the project, an fMRI paradigm comprising three novel audiovisual tasks was also fully developed and piloted. It investigates the psychological functions and neural substrates associated with emotion recognition and multimodal information integration. Abnormal functioning of the brain circuitry subserving these processes is thought to be a potential source of the social-cognitive impairments observed in ASD.

Principal Investigator: Dr Fabia Franco, Middlesex University London (UK)

Co-Investigators:

Dr Fernando Bravo, Centre for Music Cognition, Dresden University (Germany)

Dr Monica Mazza, Dept Clinical Medicine and Biotechnology, L’Aquila University (Italy)

Dr Martine Van Puyvelde, Royal Military Academy and Free University of Brussels (Belgium)

Research Assistant: Tatiana Sobolewska, Middlesex University London (UK)